We’ve all had live streams with family, friends, coworkers, or clients where poor internet connectivity results in one person repeatedly dropping in and out. And once they’ve rejoined, there is guaranteed to be at least a minute of “Hey, you’re back. Can’t hear you though . . . Nope. It’s a little fuzzy . . . That’s better. OK, not sure where I lost you, so I’ll just start over.”

Even after the connection seems to have stabilized, it’s all but guaranteed to freeze up right as someone is asking an important follow-up question. Dealing with low-bandwidth live streaming can be a frustrating experience.

Of course, it’s one thing when your mom drops off a video call. You can just call her later to find out what the neighbors have been up to. (It’s likely you should be calling more often anyways.) Dropped connections with live-streaming applications such as event broadcasts, drone surveillance, esports, or live auctions, however, can have more dire consequences.

A common way to deal with poor connectivity is to add a buffer to allow everything to catch up. However, the buffer adds latency, delaying the time between capturing the video and delivering the video stream to a subscriber. While this works for VOD-type use cases, it won’t work with live video — life won’t pause for your internet connection to catch up.

Another solution is to send a low-quality stream to everyone. Lower-quality video consumes less data and is therefore easier to process, which facilitates transmission, especially on CPU-constrained mobile devices. Unfortunately, that approach downgrades the experience for all participants, including those with high-speed connections. A 480p stream may look fine on a mobile device, but the same stream looks noticeably worse on a desktop monitor.

The challenge is determining the best approach for each user — that is, delivering high-quality streams to users with adequate bandwidth and processing power, while also accommodating those with suboptimal speed and power.

Adaptive bitrate streaming (ABR) is the best way to handle connectivity issues. A typical ABR setup employs a transcoder to create multiple streams at different bitrates and resolutions. This is usually done with media server software or hardware encoders, which send the streams to a selective forwarding unit (SFU). The SFU selects the best stream for each client. A basic implementation bases that choice only on the client’s bandwidth; the best solutions also consider the client’s display size and CPU capability when determining which stream to send.

Multiple renditions allow for playback on different bandwidths. The media server then efficiently sends the highest-quality stream possible for each viewer’s device and connection speed.
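To make the selection step concrete, here is a minimal sketch of how a server might choose a rendition from bandwidth, display size, and decoding capability. The ladder values, the `Rendition` type, and the `pick_rendition` function are all assumptions made for illustration; they are not taken from any particular media server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # target video bitrate


# Hypothetical ladder, ordered from highest to lowest quality.
LADDER = [
    Rendition("high", 720, 2000),
    Rendition("medium", 480, 1000),
    Rendition("low", 240, 400),
]


def pick_rendition(bandwidth_kbps, display_height, max_decode_height, ladder=LADDER):
    """Return the best rendition the client can receive, display, and decode."""
    for rendition in ladder:  # highest quality first
        fits_network = rendition.bitrate_kbps <= bandwidth_kbps
        fits_display = rendition.height <= display_height
        fits_cpu = rendition.height <= max_decode_height
        if fits_network and fits_display and fits_cpu:
            return rendition
    # Nothing fits, so fall back to the lowest rendition.
    return ladder[-1]


# A desktop on a fast link gets "high"; a small screen on the same link gets "medium".
print(pick_rendition(5000, 1080, 1080).name)
print(pick_rendition(5000, 480, 1080).name)
```

A bandwidth-only implementation would simply drop the display and CPU checks, which is why it can end up sending more pixels than a small or slow device can use.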

What about latency? Doesn’t all that extra processing introduce a delay?

ABR, WebRTC, and Real-Time Latency

As we’ve outlined in our whitepaper, WebRTC is the only way to achieve real-time latency. Thus, an effective solution to both low bandwidth and latency has to integrate ABR with WebRTC.

Red5 Pro has implemented adaptive bitrate (ABR) streaming with WebRTC on the server side (unlike simulcast, which implements a similar method on the client side). We’ve done this by creating multiple variants of the stream at different resolutions and bitrates so that the server can automatically select the best quality for the client’s current internet speed and device limitations. ABR dynamically downgrades and upgrades the stream quality as network conditions change. That way each user can enjoy the smoothest possible live-streaming experience without doing anything themselves.

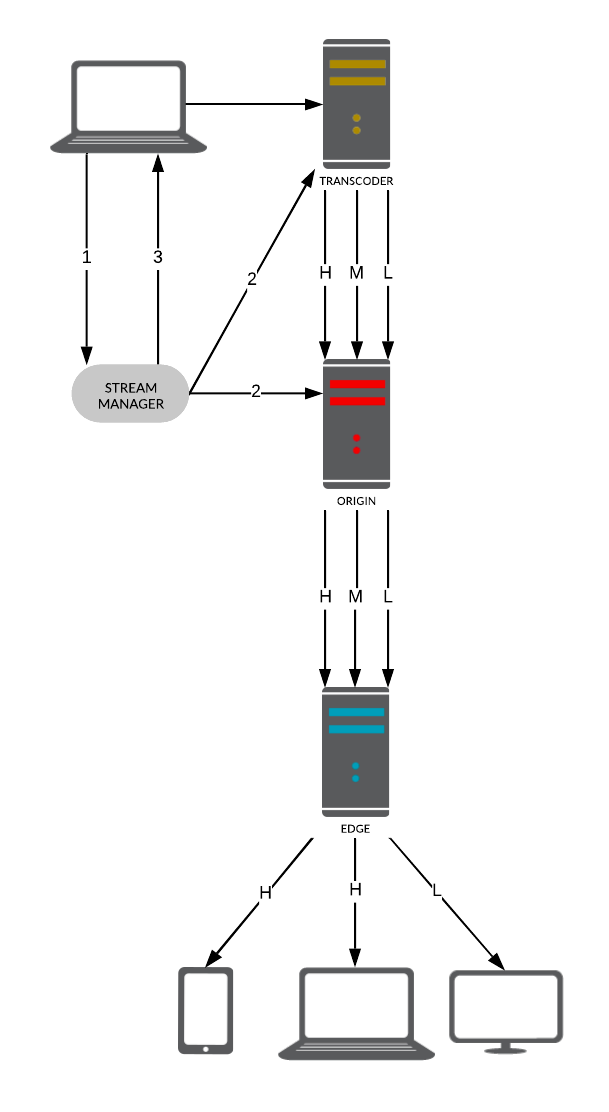

The process involves publishing a single high-quality stream to a transcoder node which then generates multiple variants at lower quality with configurable bitrate and resolution settings. Many applications will use three variants — high, medium, low — although any number can be generated. These variants are then streamed to an origin that re-streams to the edge server(s). As with all of Red5 Pro’s setups, the entire process is orchestrated by a stream manager.
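As a rough illustration of what “configurable bitrate and resolution settings” could look like, the sketch below derives three variants from a single source stream. The scale factors, the `_1`, `_2`, `_3` naming scheme, and the `build_variants` helper are assumptions made for this example, not Red5 Pro’s actual configuration format.

```python
def build_variants(stream_name, width, height, bitrate_kbps,
                   steps=((1.0, 1.0), (0.5, 0.5), (0.25, 0.25))):
    """Derive a high/medium/low ladder from one source stream.

    Each step is (resolution_scale, bitrate_scale). All values are illustrative.
    """
    variants = []
    for level, (res_scale, rate_scale) in enumerate(steps, start=1):
        variants.append({
            "name": f"{stream_name}_{level}",  # hypothetical naming scheme
            "level": level,                    # 1 is the highest quality
            # Round down to even numbers, which video encoders generally expect.
            "width": int(width * res_scale) // 2 * 2,
            "height": int(height * res_scale) // 2 * 2,
            "bitrate_kbps": int(bitrate_kbps * rate_scale),
        })
    return variants


for variant in build_variants("mystream", 1280, 720, 2000):
    print(variant)
```

Passing a longer `steps` tuple produces more variants, matching the point above that three is a common choice rather than a limit.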

It should be noted that Red5 Pro also supports publishing the variants directly to an Origin without using a Red5 Pro transcoder. In that case the variants would be generated by an external encoder or process. For this post, we will focus on using a transcoder.

Publishing a Stream

The Stream Manager SSL proxy is required in order to publish with WebRTC. The proxy forwards the publish request to the assigned transcoder. This is necessary because browsers require that WebRTC broadcasts be served over SSL. Moreover, in an autoscale environment in which Red5 Pro instances are spun up and down dynamically based on load, managing a separate certificate for every instance would be a hassle; routing through the proxy avoids that.

Specifically, publishing a stream consists of four steps (fig. 1):

- A provision is submitted to the stream manager, specifying the variants of the stream that will be required. The Stream Manager API is then used to request a transcoder endpoint.

- The stream manager selects a suitable origin and transcoder. The origin is provisioned to expect multiple versions of the same stream while the transcoder is provisioned with the details of the different stream variants that it needs to create.

- The stream manager returns a JSON object with the details of the transcoder endpoint that should be used to broadcast.

- The broadcaster can then start to publish a single stream to the transcoder.
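The client side of those four steps can be sketched roughly as follows. This is only an outline under stated assumptions: the provision layout and the `serverAddress` and `name` response fields are hypothetical stand-ins, the reply is a hard-coded string rather than a real HTTP response, and the actual request and response formats should be taken from the Stream Manager API documentation.

```python
import json


def build_provision(stream_name, variants):
    """Step 1: describe the variants the transcoder should create.

    `variants` is a list of (width, height, bitrate_kbps) tuples.
    The dictionary layout is a hypothetical shape, not the real API schema.
    """
    return {
        "stream": stream_name,
        "variants": [
            {"name": f"{stream_name}_{i}", "width": w, "height": h, "bitrate_kbps": b}
            for i, (w, h, b) in enumerate(variants, start=1)
        ],
    }


def broadcast_target(response_body):
    """Step 3: read the transcoder endpoint out of the JSON reply.

    The field names used here are assumptions for illustration.
    """
    reply = json.loads(response_body)
    return reply["serverAddress"], reply["name"]


# Step 1: the provision that would be submitted to the stream manager.
provision = build_provision("mystream", [(1280, 720, 2000), (640, 360, 1000), (320, 180, 500)])

# Steps 2 and 3 happen on the stream manager; this string stands in for its reply.
fake_reply = '{"serverAddress": "10.0.0.5", "name": "mystream"}'
host, stream = broadcast_target(fake_reply)

# Step 4: the broadcaster would now publish a single stream to this transcoder.
print(f"publish {stream} to {host}")
```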

Subscribing to a Stream

Similar to publishing, subscribing with WebRTC involves using the stream manager proxy because of the SSL requirement enforced by browsers. In this case, the proxy transfers the subscribe request to the edge provided.

When a client wants to subscribe to a stream, it has to request an edge from the stream manager. The request has to specify the stream name of the variant to subscribe to. Normally, it is best to start with a mid-range variant and then let the edge make adjustments if necessary. The edge uses Receiver Estimated Maximum Bitrate (REMB), an RTCP feedback message, to obtain a bandwidth estimate. That estimate is then used to determine which variant to serve to each subscriber based on network conditions. The edge continues to monitor the bandwidth and makes adjustments as needed.
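The switching behavior described above might look something like this minimal sketch: start in the middle of the ladder, step down as soon as the estimate falls below the current variant, and step up only when there is some headroom. The `VariantSelector` class, the bitrates, and the 20% headroom are assumptions chosen for illustration and are not Red5 Pro’s actual algorithm.

```python
class VariantSelector:
    """Pick a variant for one subscriber from successive bandwidth estimates."""

    def __init__(self, bitrates_kbps, headroom=1.2):
        self.bitrates = sorted(bitrates_kbps)  # lowest to highest
        self.index = len(self.bitrates) // 2   # start with a mid-range variant
        self.headroom = headroom               # margin required before upgrading

    @property
    def current(self):
        return self.bitrates[self.index]

    def on_estimate(self, estimate_kbps):
        """Update the selection from a new REMB-style estimate and return it."""
        # Downgrade immediately while the current variant exceeds the estimate.
        while self.index > 0 and self.bitrates[self.index] > estimate_kbps:
            self.index -= 1
        # Upgrade one level at a time, and only when there is spare capacity.
        next_index = self.index + 1
        if (next_index < len(self.bitrates)
                and self.bitrates[next_index] * self.headroom <= estimate_kbps):
            self.index = next_index
        return self.current


selector = VariantSelector([500, 1000, 2000])
for estimate in (3000, 1500, 2100, 300):
    print(estimate, "->", selector.on_estimate(estimate))
```

Requiring headroom before an upgrade is one simple way to avoid bouncing between two variants when the estimate hovers near a boundary.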

Once the stream manager has established a connection to the correct edge instance and the edge has selected the correct variant, the client can start watching the stream.

WebRTC’s Built-in ABR Support

Those familiar with WebRTC might know that WebRTC already supports ABR. With that in mind, you might be questioning the necessity of adding all these extra adjustments.

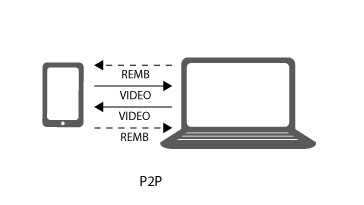

The WebRTC protocol was designed for peer-to-peer browser connections. This means that in a server-to-browser model, only the subscriber side has built-in ABR. In a one-to-one situation (fig. 2), this works fine as the publisher can just change resolutions to adjust to the single subscriber’s need.

However, when multiple subscribers are involved, things are not as simple. If the stream isn’t transcoded to multiple qualities from the beginning, then a single subscriber on a bad network would cause the publisher to switch to a lower quality, forcing everyone to watch a low-quality stream. You shouldn’t be punished just because someone else on the stream is mooching off their neighbor’s Wi-Fi.

Thus we implemented a transcoder to create those variants. The REMB feedback for each client ensures that the appropriate variant is consistently delivered to that client without affecting other clients connected to the same stream.
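A toy comparison shows why this matters. In the sketch below, a single shared encode is dragged down to the weakest subscriber’s estimate, while per-subscriber selection from transcoded variants leaves everyone else untouched. The bitrates, subscriber names, and both functions are simplified assumptions for illustration only.

```python
VARIANTS_KBPS = [2000, 1000, 500]  # hypothetical ladder, highest first


def single_encoder(estimates, source_kbps=2000):
    """No transcoding: one shared stream, limited by the weakest subscriber."""
    shared = min(source_kbps, min(estimates.values()))
    return {name: shared for name in estimates}


def per_subscriber(estimates, variants=VARIANTS_KBPS):
    """With transcoded variants: each subscriber gets the best one that fits."""
    def best_fit(estimate):
        for rate in variants:
            if rate <= estimate:
                return rate
        return variants[-1]  # nothing fits, so send the lowest variant
    return {name: best_fit(estimate) for name, estimate in estimates.items()}


# Bandwidth estimates in kbps; "bob" is the one on the neighbor's Wi-Fi.
estimates = {"alice": 5000, "bob": 600}
print(single_encoder(estimates))
print(per_subscriber(estimates))
```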

ABR is a driving force behind effective live-streaming experiences. It allows you to adjust to a full range of devices and connectivity speeds so that every user gets the best possible quality no matter their streaming conditions. That holds whether you are conducting surveillance in remote areas, simultaneously broadcasting a live stream to both web and mobile apps, or holding an international conference. Maintaining a smooth connection is invaluable to effective communication.

Importantly, the Red5 Pro configuration of ABR with WebRTC prevents the stream from being forced to cater to the lowest common denominator. However, that might mean that you can’t duck out of a meeting by pretending to have a bad connection.

Interested in finding out more about creating the best live-streaming experience? Send a message to info@red5.net or schedule a call. We’d love to show you all that we can do!