Scaling Challenges in WebRTC Live Streaming 1:1 != 1:1,000,000 When it comes to WebRTC, size matters. People have this assumption that if you can run a 1:1 video call then getting to a 3-way video call is just as easy. And well, if that’s the case, then 4-way video call shouldn’t be far behind. And… Continue reading Guest Post: Tsahi Levent-Levi – WebRTC Scaling Challenges

Scaling Challenges in WebRTC Live Streaming

1:1 != 1:1,000,000

When it comes to WebRTC, size matters.

People have this assumption that if you can run a 1:1 video call then getting to a 3-way video call is just as easy. And well, if that’s the case, then 4-way video call shouldn’t be far behind. And while we’re at it, 4 and 10 are relatively similar numbers – so this should definitely be just the same. Oh, and if we can do a 10-way video call, then why not 50? Or a 100?

This same approach of an interactive call between multiple users holds true for live broadcasting.

Broadcasting live 1:1 is different than 1:100 which, in turn, is different than 1:10,000 which is different than 1:1,000,000.

Two ways in which they differ:

- What infrastructure you’ll need to put in place

- How network errors get handled

Before I jump into these two aspects, it needs to be pointed out that effective live-broadcasting requires sub-second latencies. This is where WebRTC excels.

1. What infrastructure you’ll need to put in place

WebRTC requires, at the very least, 2 server types: signaling and NAT traversal (more about server types in WebRTC in this free video mini-course).

For a 1:1 live broadcast, that should suffice. We’re going to do the session in a peer-to-peer fashion anyway.

Going up to 1:100 or similar requires a media server. The point of that media server would be to receive the media from our broadcaster and then route it to the 100 other viewers.

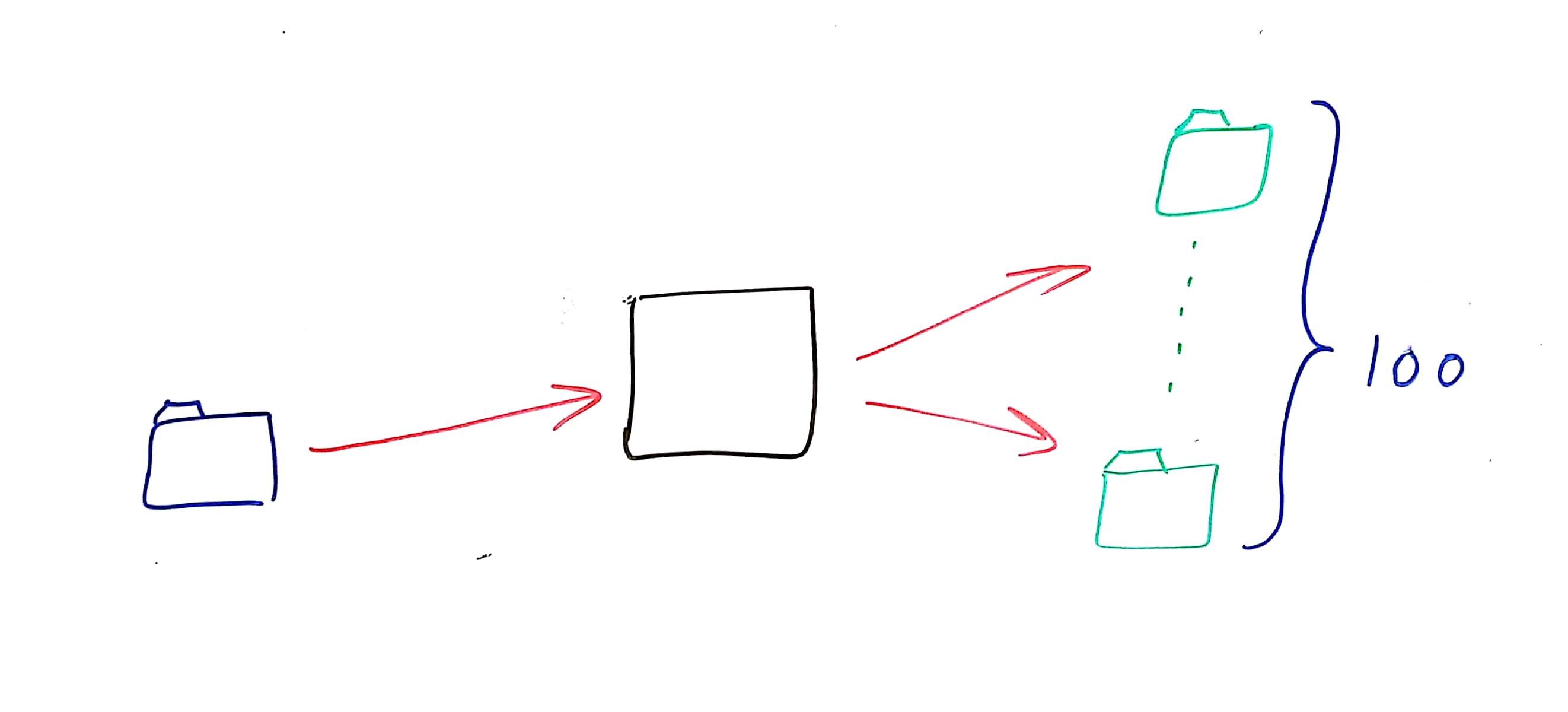

We end up with something like this for our media path:

A single server, streaming the incoming media from the broadcaster to all 100 viewers. Piece of cake.

But what happens when we grow our audience? At some point, a single server won’t be able to handle the load. It won’t fit into the amount of I/O it can handle, or the network card, etc.

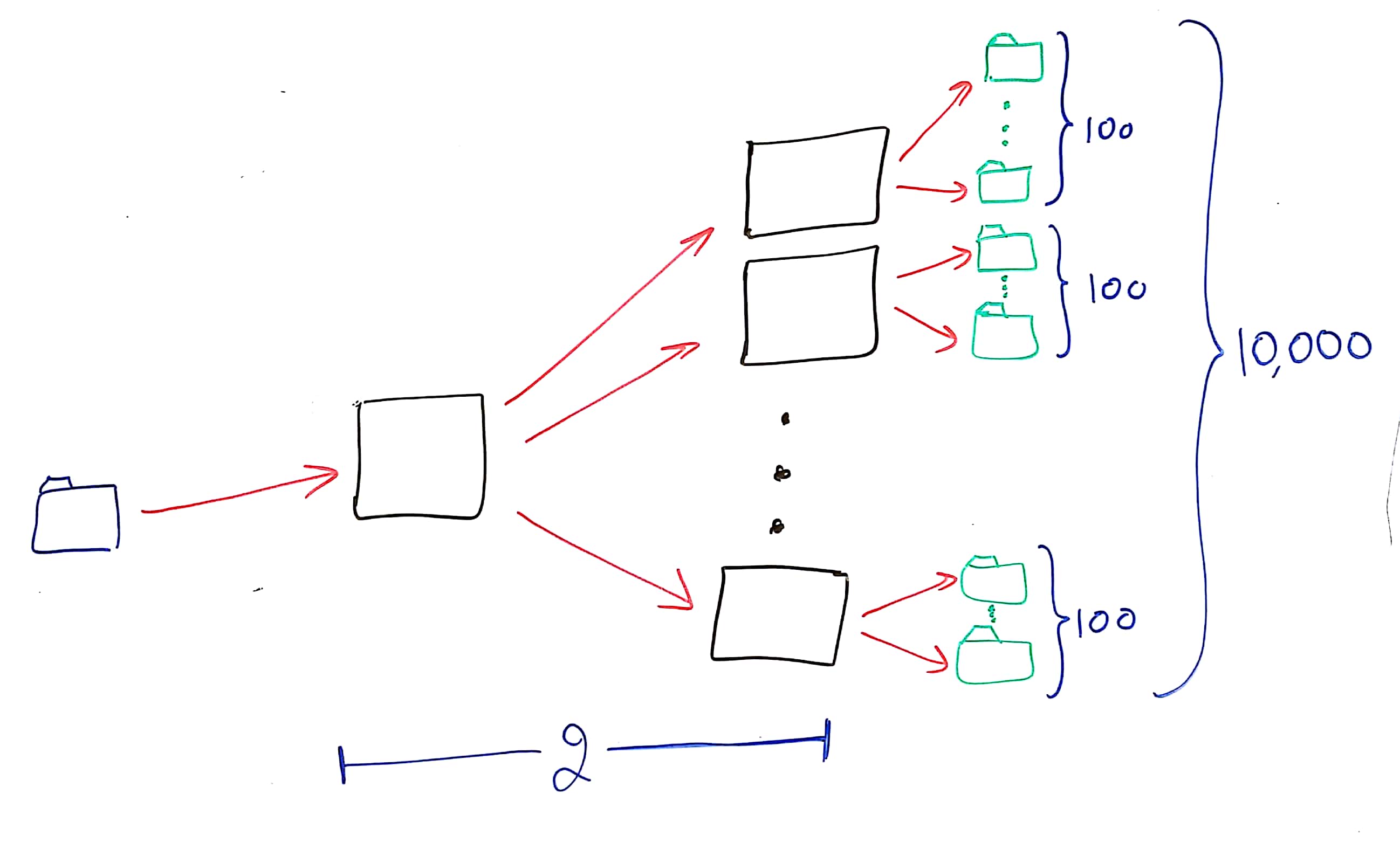

In such a case, we’ll be forced to “daisy chain” or “cascade” our media servers. So for 10,000 viewers of a single broadcasted stream, you’ll end up with something similar to this topology:

We have our “origin” server, which routes the data to a secondary set of servers who then, in turn, connect to the viewers.

It can scale nicely, but at some point, it breaks. For our own discussion here, let’s assume a single server can handle the load of 100 media streams. So our origin server can send the video to 100 other servers, which means we’re capped at 10,000 viewers for a single stream.

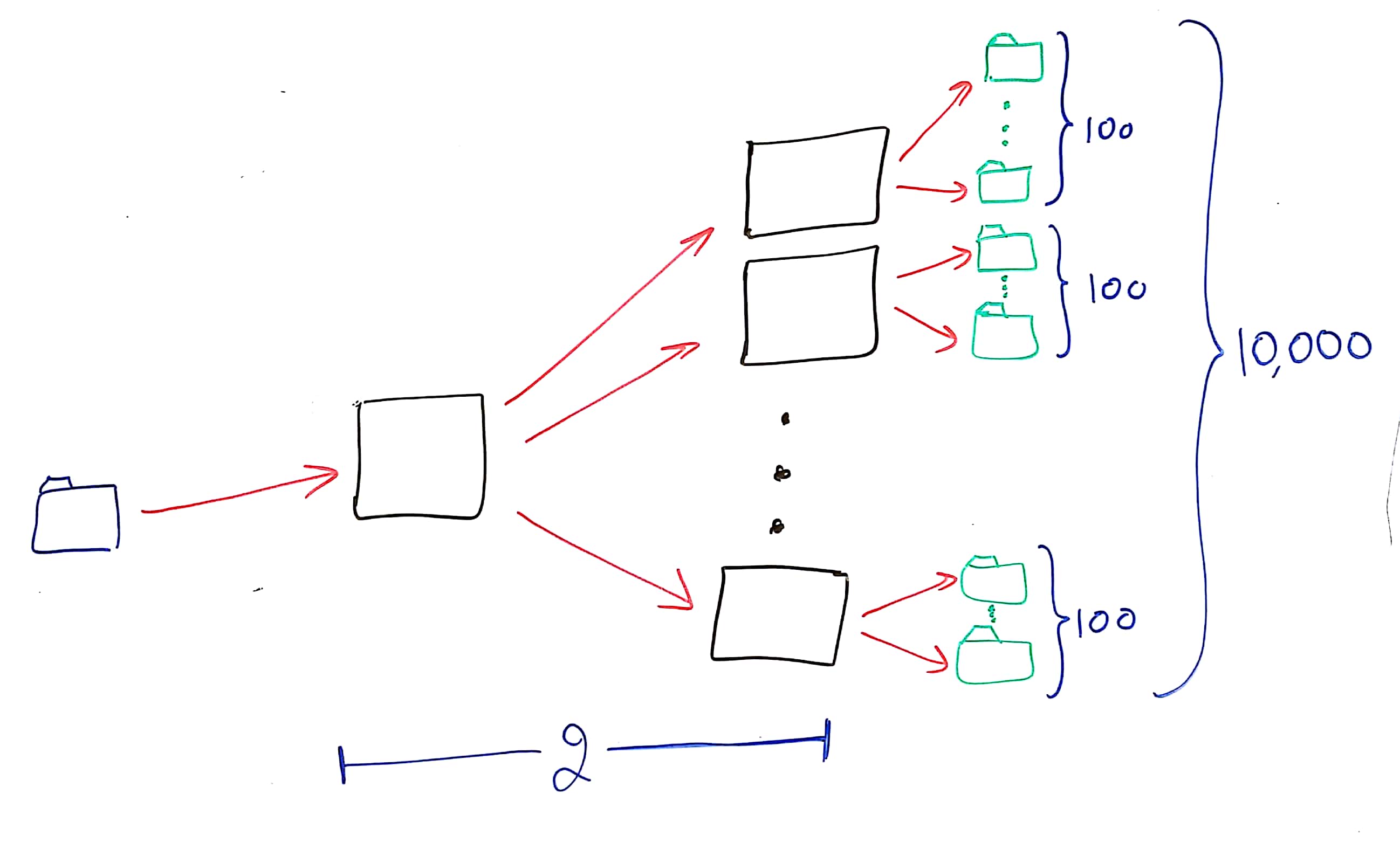

Which means we’re going to increase our daisy chain to a third level to handle a million viewers:

A few things to observe here:

- There are servers not connected to either broadcasters or viewers at all

- The more layers we add to our daisy chain, the more latency we generate (and the more moving parts we will have to handle)

Is this doable? Definitely.

Is it easy? Nope.

2. How network errors get handled

Once we’ve got our servers in place, there’s the nagging issue of how we handle network errors.

In the 1:1 case it is easy. We don’t. We let the browser handle that for us. Or the app. Whatever. The WebRTC code to do that is readily available and free (just check webrtc.org).

Going up to a 1:100 case is somewhat trickier.

There are 4 ways in which WebRTC handles network errors (packet losses):

- It identifies them, to begin with

- It can reduce or increase bitrate to accommodate to the bandwidth available

- It can resend an I-frame, to “reset” the video stream in cases of packet losses

- It can decide to add FEC to the stream

On a 1:1 call we already stated that it does that on its own, but once we add a media server, the media server has to make these decisions as well. And in our case, it needs to make it on behalf of our 100 viewers.

Going beyond 100 to 10,000 or 1,000,000 makes this even harder to deal with.

Think about this a bit. Assuming each user has on average one packet loss out of 1,000 (that’s 0.1% packet loss, which is really, really, really good, all things considered).

Increasing number of users increases the probability of packet loss. So, for 1,000,000 users, it’s fair to assume that we can reach cases where, with each packet the broadcaster sends, at least one of the viewers experiences a packet loss for it.

How do you even start handling such a thing? What logic should you employ? These types of decisions need to be taken into consideration and have to be baked into the media server implementation.

What have we missed?

I’ve simplified it all here.

There are additional headaches one needs to deal with. A few of them are:

- Viewers aren’t uniform. They come from different devices, with different capabilities and different network characteristics. How do you cater to this variance?

- Viewers may join and leave dynamically. How do you allocate them to servers, trying to maintain a high level of serviceability and user experience without breaking the bank on unused resources?

- Viewers join from different geographies. You’d likely want to allocate users to servers that are closest to them, which is another allocation problem to contend with

- There may be multiple broadcasters in your use case. Each with a different number of viewers (potential as well as real). How do you factor that into your allocation scheme?

What’s next?

Live streaming is one of the interesting use cases for WebRTC in 2017.

If you want to learn more about WebRTC, I’d like to invite you to my free webinar tomorrow. It won’t be about live streaming directly, but it will show some common mistakes people do when they try developing an application using WebRTC.

This webinar is also where I’ll be explaining my online WebRTC training – a course meant to give you the understanding and tools you need to build WebRTC applications. I am relaunching it for another round and it would be great to see you there.

See you in the WebRTC webinar.