Top Five Use Cases Where P2P with WebRTC Falls Short

WebRTC is fast becoming the default protocol for low-latency video transmission in the web browser. People are building all kinds of innovative apps that leverage the technology in creative ways. But many people think of WebRTC as purely a peer-to-peer (P2P) technology. While that’s technically correct (you always need at least two peers to communicate), there are many scenarios where you would want to put a server in the middle. We built Red5 Pro as a WebRTC server platform that pulls streams from broadcasters and redistributes them to subscriber peers at scale. Since our platform supports a wide variety of setups, we’ve seen many of the reasons people come to us. Here are five common ones that stand out.

One-to-Many Broadcasts

As you can probably imagine, this is one of the largest use cases for a server-in-the-middle implementation of WebRTC, and the majority of Red5 Pro clients are focused on it. P2P generally doesn’t work for broadcasting because a single broadcaster peer would have to send its stream to every remote peer, and that audience could number in the millions. Each viewer needs its own copy of the stream, so the broadcaster’s upload bandwidth grows linearly with the size of the audience. Almost all designs for this sort of delivery (think traditional CDNs) have used a client-server model, and the reason makes perfect sense. You can’t negotiate connections one at a time between one broadcaster peer and every remote viewer once you get into the hundreds, much less the millions. The broadcaster would simply be flooded with requests all at once. You are much better off moving that pairing to a server infrastructure, which you can scale far more easily to handle all those connections.
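To put numbers on that linear growth, here is a back-of-the-envelope sketch of the broadcaster’s upload requirement. It assumes every viewer receives one rendition at a fixed bitrate and ignores protocol overhead. The function name and the 2.5 Mbps figure are illustrative, not measurements.

```typescript
// Delivery model used by the broadcaster (illustrative labels).
type DeliveryModel = "p2p" | "server";

// Upload bandwidth (Mbps) the broadcaster needs under each model.
// Assumes one fixed-bitrate rendition per viewer and no protocol overhead.
function broadcasterUploadMbps(
  viewers: number,
  bitrateMbps: number,
  model: DeliveryModel,
): number {
  if (viewers < 0 || bitrateMbps < 0) {
    throw new RangeError("viewers and bitrate must be non-negative");
  }
  // P2P: the broadcaster uploads a separate copy to every viewer.
  // Server in the middle: the broadcaster uploads one copy to the origin.
  return model === "p2p" ? viewers * bitrateMbps : bitrateMbps;
}

// 10,000 viewers of a hypothetical 2.5 Mbps stream.
console.log(`P2P:    ${broadcasterUploadMbps(10000, 2.5, "p2p")} Mbps`);
console.log(`Server: ${broadcasterUploadMbps(10000, 2.5, "server")} Mbps`);
```

In the P2P case the broadcaster would need 25,000 Mbps (25 Gbps) of upstream capacity. With a server in the middle it stays at 2.5 Mbps regardless of audience size.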

You might have noticed that I’m using the term “server” a bit loosely here. For a broadcast of any real scale, you need to offload the traffic to a clustering model like our Autoscale solution. To make matters slightly more confusing, our autoscaled clustering model uses P2P to connect the server nodes together. Below is a diagram showing the process of spinning edge instances up and down in our Autoscale solution.
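The decision behind spinning edges up and down can be approximated with a simple threshold rule. This is a generic illustration, not Red5 Pro’s actual Autoscale logic. `decideScaling`, the per-edge `capacity`, and the 75% target load are all hypothetical.

```typescript
// Snapshot of a hypothetical edge cluster.
interface ClusterState {
  edges: number;       // edge instances currently running
  subscribers: number; // subscribers connected across all edges
}

type ScaleAction =
  | { kind: "scale-up"; add: number }
  | { kind: "scale-down"; remove: number }
  | { kind: "hold" };

// Hypothetical threshold rule: keep each edge at or below `targetLoad`
// of its `capacity`, and never run fewer than `minEdges` instances.
function decideScaling(
  state: ClusterState,
  capacity: number,
  targetLoad = 0.75,
  minEdges = 1,
): ScaleAction {
  const perEdge = capacity * targetLoad;
  const needed = Math.max(minEdges, Math.ceil(state.subscribers / perEdge));
  if (needed > state.edges) return { kind: "scale-up", add: needed - state.edges };
  if (needed < state.edges) return { kind: "scale-down", remove: state.edges - needed };
  return { kind: "hold" };
}

console.log(decideScaling({ edges: 2, subscribers: 2000 }, 1000));
```

A production cluster would also drain subscribers from an edge before terminating it and add a cooldown period so the cluster does not flap between sizes.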

I would also be doing our readers a disservice if I didn’t mention some interesting P2P approaches to broadcasting that offload part of the delivery to other peers in a mesh-style network over WebRTC. This works well if you can tolerate a sizable buffer and don’t care much about latency. A server is still involved in this setup, either streaming the content or acting as a middleman for live ingests. VOD delivery makes a lot of sense in this P2P scenario, since it reduces bandwidth hosting costs. However, for the majority of our customers’ use cases, live streaming and low latency are a huge factor, so we haven’t taken the P2P approach for one-to-many broadcasts with Red5 Pro… well, at least not yet.
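Why does peer-assisted delivery trade latency for bandwidth? One way to see it is to model the mesh as a balanced relay tree. The origin feeds a few peers, each of those re-forwards to a few more, and every hop adds delay. This sketch assumes a perfectly balanced tree, peers with spare upload capacity, and a hypothetical fixed per-hop delay. Real peer-assisted networks are less regular.

```typescript
// Number of relay hops needed to reach `viewers` peers when the origin
// feeds `fanout` peers and every peer re-forwards to `fanout` more.
// Assumes a perfectly balanced tree and peers with spare upload bandwidth.
function relayDepth(viewers: number, fanout: number): number {
  if (fanout < 1 || fanout % 1 !== 0) {
    throw new RangeError("fanout must be a positive integer");
  }
  let depth = 0;
  let reached = 0; // peers covered so far
  let layer = 1;   // size of the current layer (the origin counts as layer 0)
  while (reached < viewers) {
    layer *= fanout; // each node in the previous layer feeds `fanout` new peers
    reached += layer;
    depth += 1;
  }
  return depth;
}

// Worst-case delay added by relaying, given a hypothetical per-hop delay
// (network transit plus whatever buffer each peer holds).
function worstCaseRelayDelayMs(viewers: number, fanout: number, perHopMs: number): number {
  return relayDepth(viewers, fanout) * perHopMs;
}

console.log(relayDepth(1000, 3));                 // 6 hops
console.log(worstCaseRelayDelayMs(1000, 3, 200)); // 1200 ms
```

The origin only uploads `fanout` copies, which is where the bandwidth savings come from. The last viewers, though, sit six hops away, which is why this approach suits buffered VOD better than live, low-latency streams.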

Recordings

Another common use case where P2P breaks down is recording video. If you want an archive of a broadcast, you have a couple of choices. One, record the stream locally in the browser client (for example, with the MediaRecorder API, saving the result to browser storage such as IndexedDB). Two, have a server take care of recording the stream. The first solution works well in simple cases where you only want to store one of the streams. In other cases, though, you might have a multiparty chat going on and want to record the entire conversation. Then it is best to combine all the streams into one with a transcode process after the session has ended. It’s a much cleaner solution to already have the recordings on the server than to upload them from each client before doing the post-processing. Another reason to avoid local storage for recordings is that browsers limit how much data a site can store, and that data is not guaranteed to persist permanently.
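The post-session transcode that combines per-participant recordings can be driven by FFmpeg. The sketch below only builds the argument list; you would pass it to something like Node’s `child_process.spawn("ffmpeg", args)`. It uses FFmpeg’s `xstack` filter to tile the videos in one row and `amix` to mix the audio. It assumes every file has one video and one audio stream and that all recordings started at the same moment. Real sessions usually need per-file offsets, for example via FFmpeg’s `-itsoffset` input option. `composeRecordingArgs` and the file names are hypothetical.

```typescript
// Build ffmpeg arguments that tile N per-participant recordings side by
// side in one video and mix their audio into a single track.
// Assumes each input has one video and one audio stream, all time-aligned.
function composeRecordingArgs(inputs: string[], output: string): string[] {
  if (inputs.length < 2) {
    throw new RangeError("need at least two recordings to combine");
  }
  const args: string[] = [];
  for (const file of inputs) args.push("-i", file);

  const videoPads = inputs.map((_, i) => `[${i}:v]`).join("");
  const audioPads = inputs.map((_, i) => `[${i}:a]`).join("");

  // xstack layout for a single row: tile i sits at x = w0+...+w(i-1), y = 0.
  const layout = inputs
    .map((_, i) => {
      if (i === 0) return "0_0";
      const widths: string[] = [];
      for (let j = 0; j < i; j++) widths.push(`w${j}`);
      return `${widths.join("+")}_0`;
    })
    .join("|");

  const filter =
    `${videoPads}xstack=inputs=${inputs.length}:layout=${layout}[v];` +
    `${audioPads}amix=inputs=${inputs.length}[a]`;

  return args.concat(["-filter_complex", filter, "-map", "[v]", "-map", "[a]", output]);
}

console.log(composeRecordingArgs(["alice.webm", "bob.webm"], "session.mp4").join(" "));
```

Doing this on the server means the inputs are already sitting next to each other on disk. The client-side alternative requires every participant to upload a recording first.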

Compatibility with Other Formats

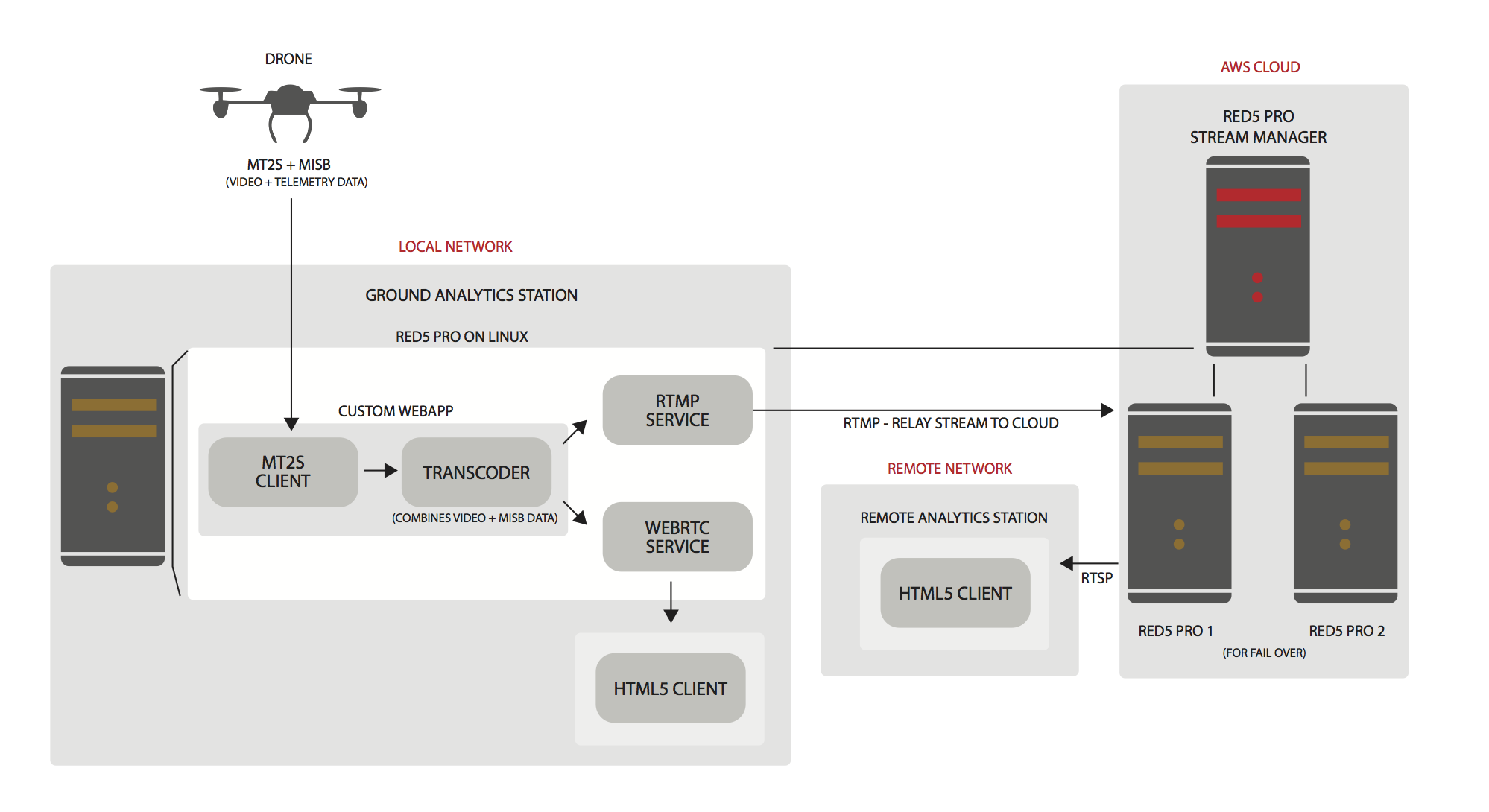

Traditional phone systems, IoTV (Internet of Things Video) devices, mobile browsers (HLS), and many other scenarios require transcoding the stream into other protocols. P2P with WebRTC doesn’t handle transcoding, so the only way around this is to put a server in the middle. We are seeing all kinds of use cases that involve live transcoding to other formats. Examples include pulling in RTSP streams from security cameras for real-time display in the browser, and relaying live RTSP streams from body cams and M2TS streams from drones so first responders can view them from a command center. Below is a diagram of one such deployment.
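As an illustration of the ingest side of such a deployment, the sketch below builds FFmpeg arguments that pull an RTSP camera feed, re-encode it to H.264/AAC with low-latency x264 settings, and push it over RTMP. It assumes a media server that accepts RTMP ingest and repackages the stream for WebRTC subscribers. The URLs and the `rtspIngestArgs` helper are hypothetical, and the keyframe interval is an arbitrary example value.

```typescript
// Build ffmpeg arguments that relay an RTSP camera feed to a media server
// over RTMP. Assumes a hypothetical server that accepts RTMP ingest and
// repackages the stream for WebRTC viewers.
function rtspIngestArgs(cameraUrl: string, ingestUrl: string): string[] {
  return [
    "-rtsp_transport", "tcp", // interleaved TCP is often more reliable than UDP across NATs
    "-i", cameraUrl,
    "-c:v", "libx264",
    "-preset", "veryfast",
    "-tune", "zerolatency",   // x264 tuning that disables B-frames and lookahead
    "-g", "60",               // keyframe every 60 frames (about 2 s at 30 fps)
    "-c:a", "aac",
    "-f", "flv",              // RTMP carries FLV-muxed streams
    ingestUrl,
  ];
}

console.log(
  rtspIngestArgs("rtsp://camera.local/stream1", "rtmp://media.example.com/live/cam1").join(" "),
);
```

Frequent keyframes let new viewers start decoding quickly, at the cost of some extra bitrate.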

Another situation is using SIP to communicate with traditional phone systems, such as those used in call centers. Although I think their days are numbered, believe it or not, some people still use traditional phones. In fact, companies like Twilio have built entire businesses around enabling this very use case.

Multiparty Calls

When you build a video chat application with more than four simultaneous connections, you are going to want to put a server in the middle. The reason is that if every user has to connect directly to every other user to send and receive video, the number of streams per client adds up quickly. Each client maintains an outgoing and an incoming stream for every other participant, so with N participants each client handles 2(N-1) streams. On mobile devices, which have even less processing power, even four connections can be problematic. Routing the media through a server therefore helps tremendously.
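The arithmetic behind that growth is easy to sketch. The helper names below are illustrative. They count media streams per client and ignore details such as simulcast layers or separate audio tracks.

```typescript
// Streams a single client must send and receive.
interface StreamCounts {
  uplink: number;   // copies of its own stream the client uploads
  downlink: number; // remote streams the client receives and decodes
}

// Full P2P mesh: every client exchanges streams directly with every other participant.
function meshStreams(participants: number): StreamCounts {
  const others = Math.max(0, participants - 1);
  return { uplink: others, downlink: others };
}

// Server in the middle (SFU): each client uploads once, and the server
// forwards everyone else's stream to it.
function sfuStreams(participants: number): StreamCounts {
  const others = Math.max(0, participants - 1);
  return { uplink: participants > 0 ? 1 : 0, downlink: others };
}

console.log(meshStreams(6)); // { uplink: 5, downlink: 5 }
console.log(sfuStreams(6));  // { uplink: 1, downlink: 5 }
```

In a six-person mesh, each client uploads five copies of its video. Behind a server it uploads one, which matters most on constrained mobile uplinks.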

The preferred method is for the server to act as a Selective Forwarding Unit (SFU). With an SFU in the middle, each client sends only one outgoing stream, which the server then forwards to the other participants. Red5 Pro acts both as an SFU and as a media server, so it supports this solution as well as large-scale broadcasting and transcoding. Because of that flexibility, we’ve seen some very interesting hybrid apps where a multiparty video chat is part of a very large broadcast. Below is a deployment diagram of one such app, which lets the celebrities of a TV show stream to their fans and hold live conversations with someone from the audience.

If you are curious about your options for multiparty video chat and why an SFU wins out, I highly recommend Tsahi Levent-Levi’s article on the subject.
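To make the SFU’s forwarding role concrete, here is a minimal in-memory model of its routing table. It is an illustration, not Red5 Pro’s implementation. Each publisher sends one stream to the SFU, and the SFU forwards those packets, without decoding them, only to participants who subscribed to that publisher. The "selective" part is the subscription list. The `SfuRouter` class and its methods are hypothetical.

```typescript
// Minimal model of an SFU routing table (hypothetical, for illustration).
// Publishers send one stream to the SFU; the SFU forwards each packet
// unchanged to that publisher's current subscribers.
class SfuRouter {
  // publisherId -> ids of participants subscribed to that publisher
  private subscriptions: Record<string, string[]> = {};

  subscribe(subscriberId: string, publisherId: string): void {
    if (subscriberId === publisherId) return; // never echo a stream back to its sender
    const subs: string[] = this.subscriptions[publisherId] || [];
    if (subs.indexOf(subscriberId) === -1) subs.push(subscriberId);
    this.subscriptions[publisherId] = subs;
  }

  unsubscribe(subscriberId: string, publisherId: string): void {
    const subs = this.subscriptions[publisherId];
    if (subs) {
      this.subscriptions[publisherId] = subs.filter((id) => id !== subscriberId);
    }
  }

  // Participants that should receive a packet published by `publisherId`.
  route(publisherId: string): string[] {
    return (this.subscriptions[publisherId] || []).slice();
  }
}

const router = new SfuRouter();
router.subscribe("bob", "alice");
router.subscribe("carol", "alice");
console.log(router.route("alice")); // [ 'bob', 'carol' ]
```

A client can drop a stream it doesn’t need, such as an off-screen participant, by unsubscribing. That saves its downlink without affecting anyone else.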

Firewall Issues

Reliability is essential for a video streaming platform, and in many P2P WebRTC video calling solutions we see failure rates as high as 10%. In our experience, this is mostly due to trouble getting through firewalls. ICE, which relies on STUN and TURN, handles NAT and firewall traversal in about 95% of cases. Even so, reliably getting through to the other caller is still a very common complaint we hear from our customers. Putting a server in the middle reduces NAT traversal problems because the server peer is fully reachable on the public internet, so there is nothing to worry about on that side of the connection. Most networks are configured to allow access to outside servers without problems, because that is how the traditional Internet (the client/server model) was designed.
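For reference, here is how STUN and TURN typically show up in a WebRTC client’s configuration. ICE gathers candidates from all configured servers. It prefers direct and STUN-derived candidate pairs and uses TURN relay candidates when those fail. Offering TURN over TLS on TCP port 443 helps with restrictive firewalls that usually leave that port open for HTTPS. The hostnames and credentials are placeholders. The interfaces mirror the shape of the browser’s `RTCIceServer` and `RTCConfiguration` dictionaries so the sketch runs outside a browser.

```typescript
// Same shape as the browser's RTCIceServer dictionary.
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

// Subset of RTCConfiguration used here.
interface IceConfig {
  iceServers: IceServer[];
  iceTransportPolicy: "all" | "relay";
}

// Placeholder servers: a STUN server for discovering the public address,
// and a TURN server reachable over UDP and over TLS on port 443.
function buildIceConfig(username: string, credential: string, relayOnly = false): IceConfig {
  return {
    iceServers: [
      { urls: "stun:stun.example.com:3478" },
      {
        urls: [
          "turn:turn.example.com:3478?transport=udp",
          "turns:turn.example.com:443?transport=tcp",
        ],
        username,
        credential,
      },
    ],
    // "relay" forces all media through TURN, which is handy for testing the fallback path.
    iceTransportPolicy: relayOnly ? "relay" : "all",
  };
}

console.log(JSON.stringify(buildIceConfig("demo-user", "demo-secret"), null, 2));
```

In a browser, the resulting object can be passed directly to `new RTCPeerConnection(config)`. With a server in the middle that has a public address, far fewer sessions ever need the TURN path.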

Conclusion

As you can see, there are many scenarios where a P2P model breaks down and you need to introduce a server in the middle. We’ve been looking for other ways to make it easy for WebRTC developers to build these kinds of apps that require a server. We would love to know if you can think of other use cases where this is important. Let us know in the comments if you have other examples or other things you think Red5 Pro should do.