
Tsahi Levent-Levi wrote a great post today on the state of live broadcast applications that leverage WebRTC. He correctly identified that many people use WebRTC for the broadcaster, but few use it for the subscribers/viewers of the live streams. HLS and MPEG-DASH introduce latency, while RTMP uses Flash; none of these are great for the user experience. He then goes on to say that he believes the future of live streaming apps will include low latency viewer clients based on WebRTC. In general, I think he is correct, and it’s one of the main reasons we built Red5 Pro the way we did.

WebRTC guru Chad Hart pulled me into a Twitter conversation about the topic, so I figured I would write up my thoughts. Thanks for alerting me to this, Chad!

@murillo @HCornflower curious to hear what @mrchrisallen of @infrared5 thinks on this: WebRTC vs. RTMP vs. HLS..

— Chad Hart (@chadwallacehart) February 18, 2016

First, let’s quickly look at the protocols Tsahi mentioned:

- WebRTC – Low latency protocol, built on open standards, uses SRTP for transport, works in most modern browsers (Chrome, Firefox and Opera) but not yet in Internet Explorer or Safari.

- RTMP – Low latency TCP-based protocol originally built for Flash. Requires Flash Player to run in the browser. (Note: Tsahi incorrectly described this one as a high latency protocol.)

- HLS – High latency, non-standard, HTTP-based protocol backed by Apple

- MPEG-DASH – High latency, HTTP-based ISO/IEC standard backed by Google, among others; very similar to HLS and shares many of its faults
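To see why the segment-based protocols sit at the high latency end of this list: HLS and MPEG-DASH package the stream into short files that players fetch over HTTP, and a player usually waits for a few complete segments before it starts playing. The rough estimate below is a minimal sketch; the function name, segment durations and overhead figures are illustrative assumptions, not measurements of any particular player or CDN.

```python
def segmented_latency_estimate(segment_seconds: float,
                               segments_buffered: int,
                               overhead_seconds: float = 0.0) -> float:
    """Rough estimate of viewer delay for segment-based HTTP streaming.

    A segment cannot be published until it has been fully recorded, and the
    player buffers `segments_buffered` whole segments before playback starts.
    `overhead_seconds` stands in for encoding, packaging and network time.
    All values are illustrative assumptions.
    """
    if segment_seconds <= 0:
        raise ValueError("segment_seconds must be positive")
    if segments_buffered < 1:
        raise ValueError("segments_buffered must be at least 1")
    if overhead_seconds < 0:
        raise ValueError("overhead_seconds must be non-negative")
    return segment_seconds * segments_buffered + overhead_seconds


# Hypothetical settings: long segments vs. aggressively short ones.
print(segmented_latency_estimate(10, 3))      # 30.0
print(segmented_latency_estimate(2, 3, 1.0))  # 7.0
```

Because WebRTC and RTMP push media continuously over a persistent connection rather than in whole-file segments, they avoid this buffering floor.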

This is a good rundown of the main protocols used in live streaming products. As you can see, the only two that handle low latency well are RTMP and WebRTC. While many solutions exist for scaling Flash-based RTMP live streaming apps, unfortunately few have figured out how to scale WebRTC in the same way.

Scaling

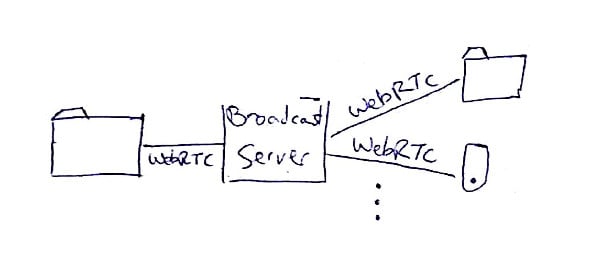

While Tsahi simplifies his diagram a bit by showing one media server here:

the truth of the matter is, to do any live broadcast at scale, you need multiple servers to handle the load.
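One common way to spread that load is an origin/edge topology: broadcasters publish to origin servers, origins relay streams to edge servers, and viewers subscribe to the edges. The sizing helper below is a hypothetical sketch of that arithmetic; the per-server capacities are assumed inputs you would have to measure for your own server, codec and bitrate, and the names are not taken from Red5 Pro or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterPlan:
    origins: int  # servers that accept broadcasters' published streams
    edges: int    # servers that deliver streams to viewers


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floating-point rounding."""
    return -(-a // b)


def plan_cluster(broadcasters: int, viewers: int,
                 streams_per_origin: int, viewers_per_edge: int) -> ClusterPlan:
    """Hypothetical origin/edge sizing.

    Each origin accepts at most `streams_per_origin` published streams and
    each edge serves at most `viewers_per_edge` subscribers. At least one
    origin and one edge are always provisioned.
    """
    if broadcasters < 0 or viewers < 0:
        raise ValueError("counts must be non-negative")
    if streams_per_origin < 1 or viewers_per_edge < 1:
        raise ValueError("capacities must be at least 1")
    origins = max(1, ceil_div(broadcasters, streams_per_origin))
    edges = max(1, ceil_div(viewers, viewers_per_edge))
    return ClusterPlan(origins=origins, edges=edges)


print(plan_cluster(200, 50_000, streams_per_origin=100, viewers_per_edge=2_000))
# ClusterPlan(origins=2, edges=25)
```

This deliberately ignores origin-to-edge bandwidth and uneven audiences across streams; it only shows why publishing and playback capacity are sized separately.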

CDNs or Cloud Providers

Many people today direct their streams at CDNs (Content Delivery Networks) for this. Others, including Facebook, are building large-scale clustering of their own. However, if you look closely at Facebook’s implementation, they still haven’t figured out how to deliver a low latency browser protocol at scale; they are using RTMP. Truth be told, CDNs are just clustered servers running on their own backbone and hardware too. Facebook just made their own CDN.

Most commercial CDNs will allow you to stream RTMP live to Flash clients, but they tend to charge a premium for it. HLS has become the default standard with CDNs because it’s HTTP-based, easy to scale, and they sell it at a lower price. Still, I think CDNs are pretty much antiquated at this point. With so many easy-to-use cloud networks like AWS and Google Compute Engine, why shouldn’t application developers simply deploy on the infrastructure they choose? This is our philosophy with Red5 Pro, and it’s why we developed our clustering solution to work on any cloud network. I actually can’t wait to share what we are working on next with our cloud clustering solution! Stay tuned for that.

It’s Not All WebRTC or Go Home

I also love this tweet today from Sergio Garcia Murillo:

@chadwallacehart @HCornflower @mrchrisallen @infrared5 btw, just a reminder, ZERO support for webrtc on IE/iphones/ipads. You need HLS/RTMP.

— Sergio GarciaMurillo (@murillo) February 18, 2016

Sergio is right: you can’t completely rely on WebRTC in all environments today, but that doesn’t mean you can’t develop low latency streaming solutions. With Red5 Pro (or other tools), you can work with legacy browsers through RTMP/Flash, develop native apps for mobile, and, coming soon, stream to WebRTC-based clients.
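The hybrid approach described above amounts to capability-based fallback: offer each viewer the lowest latency playback path their client supports, and drop to HLS only as a last resort. The function below is a hypothetical sketch of that ordering; the capability flags, protocol labels and preference order are illustrative assumptions, and real feature detection (for example, checking whether the browser exposes `RTCPeerConnection` or has the Flash plugin) would happen on the client.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ClientCaps:
    native_sdk: bool  # running inside a native mobile app with a streaming SDK
    webrtc: bool      # browser supports WebRTC (exposes RTCPeerConnection)
    flash: bool       # Flash Player is installed, so RTMP playback is possible
    hls: bool         # client can play HLS (e.g., Safari on iPhone/iPad)


def playback_options(caps: ClientCaps) -> List[str]:
    """Return the playback paths this client supports, lowest latency first.

    HLS comes last because its segment buffering adds the most delay.
    The ordering and labels are illustrative assumptions.
    """
    preference = [
        ("native-sdk", caps.native_sdk),
        ("webrtc", caps.webrtc),
        ("rtmp-flash", caps.flash),
        ("hls", caps.hls),
    ]
    return [name for name, supported in preference if supported]


# Hypothetical 2016-era clients:
iphone_safari = ClientCaps(native_sdk=False, webrtc=False, flash=False, hls=True)
ie_with_flash = ClientCaps(native_sdk=False, webrtc=False, flash=True, hls=False)
desktop_chrome = ClientCaps(native_sdk=False, webrtc=True, flash=True, hls=False)

print(playback_options(iphone_safari))   # ['hls']
print(playback_options(ie_with_flash))   # ['rtmp-flash']
print(playback_options(desktop_chrome))  # ['webrtc', 'rtmp-flash']
```

A player would try the first entry and fall through to the next one if the connection fails.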

Summing it all up

WebRTC is awesome technology, and it has a huge future in the live broadcast/streaming space; its role will only grow as more and more browsers adopt the standard. For now, we have to take a hybrid approach and rely on other protocols where appropriate.

Finally, one of my favorite statements Tsahi made in the post is this:

To build such a thing, one cannot just say he wants low latency broadcast capabilities. Especially not if he is new to video processing and WebRTC.

The only teams that can get such a thing built are ones who have experience with video streaming, video conferencing and WebRTC – that’s three different domains of expertise. While such people exist, they are scarce.

Luckily, we are such a team, and providing low latency live broadcasts at scale is one of the main reasons we built Red5 Pro. We want to make it super easy for any developer to build these kinds of experiences, regardless of their background. Please let us know your thoughts: what do you think WebRTC’s role is in live streaming today, and in the future?