Why is My Latency so High: 9 Reasons Why

Do you enjoy waiting? Of course not. Nobody does. Which is exactly why no one likes latency.

Before we get too far, what is latency?

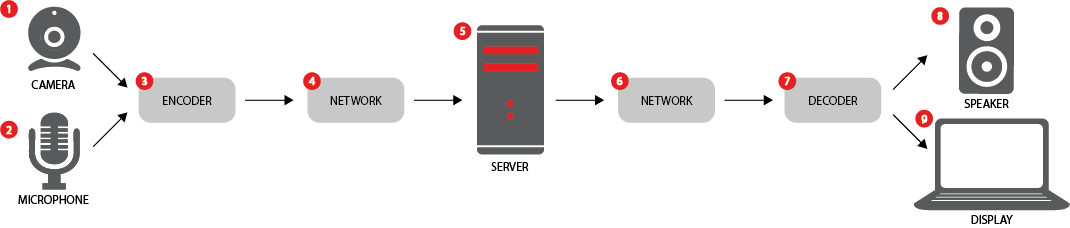

Latency, according to how we like to define it, is the time it takes video (captured by the camera) to travel across the internet to actually appear to the person watching that video. As one can imagine, there are all kinds of transcoding, encoding, recoding and otherwise-ing happening from the first point of capture to the point of viewing.

Accordingly, we’ve gone to tremendous lengths to bring this time as low as possible. In this post, we would like to delve into each of the specific areas that add to latency:

1. Video Capture

Believe it or not, it takes time to capture video from a camera and encode it. The camera’s sensor converts light into pixels, those pixels become a stream of raw data, and that data is then compressed with a video codec. This entire process typically takes about 0.75 ms.
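To see why the compression step is unavoidable, it helps to estimate how much raw data a camera produces. The sketch below assumes 8-bit YUV 4:2:0 frames (12 bits per pixel) and an illustrative 4 Mbit/s encoded target; both are assumptions for the arithmetic, not figures from this post.

```python
def raw_video_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: float = 12.0) -> float:
    """Uncompressed video bitrate in bits per second.

    The default of 12 bits/pixel assumes 8-bit YUV 4:2:0: one full-resolution
    luma plane plus two quarter-resolution chroma planes.
    """
    return width * height * bits_per_pixel * fps


raw = raw_video_bitrate_bps(1920, 1080, 30)
encoded = 4_000_000  # assumed H.264 live-stream target bitrate, for illustration only

print(f"raw 1080p30: {raw / 1e6:.1f} Mbit/s")
print(f"compression ratio at 4 Mbit/s: {raw / encoded:.0f}x")
```

Roughly 746 Mbit/s of raw 1080p30 video would not fit through most connections, so the encoder has to shrink it by two orders of magnitude before it can be streamed.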

2. Audio Capture

Same thing as above, only this time the microphone converts acoustic energy (sound waves) into electrical energy (the audio signal), which is sampled into bytes and then compressed with an audio codec.
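Audio capture has its own arithmetic. Raw PCM size follows from sample rate, bit depth, and channel count, and a frame-based encoder cannot compress anything until it has collected a full frame of samples. The sketch below assumes 48 kHz, 16-bit stereo input; the frame sizes (1024 samples, typical for AAC-LC, and 960 samples, a 20 ms Opus frame at 48 kHz) are common values used only as examples.

```python
def pcm_bytes_per_second(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Size of uncompressed PCM audio per second of sound."""
    return sample_rate_hz * (bit_depth // 8) * channels


def frame_duration_ms(samples_per_frame: int, sample_rate_hz: int) -> float:
    """How long the encoder must wait to fill one codec frame."""
    return 1000.0 * samples_per_frame / sample_rate_hz


print(pcm_bytes_per_second(48_000, 16, 2), "bytes/s of raw stereo PCM")
print(f"AAC-LC frame (1024 samples): {frame_duration_ms(1024, 48_000):.2f} ms")
print(f"Opus 20 ms frame (960 samples): {frame_duration_ms(960, 48_000):.2f} ms")
```

The frame duration is a floor on audio encoding delay: no matter how fast the encoder runs, it has to wait for that much sound to arrive first.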

Streaming to the Server:

3. The Protocol

After the audio and video have been captured and encoded, they are packaged into a streaming protocol so they can be sent over the network.

RTMP was designed specifically for low latency and remains a popular choice for live-streaming applications. While Red5 Pro supports RTMP, it is not the only choice. Other supported ingest options, such as RTSP and WebRTC, offer equally low latency with the Red5 Pro implementation.
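As a concrete picture of "packaging," RTSP and WebRTC both carry media in RTP packets (WebRTC uses SRTP, which encrypts the payload but keeps the same header layout). The sketch below packs the 12-byte fixed RTP header defined in RFC 3550, without CSRCs or header extensions. The payload type 96 (from the dynamic range) and the SSRC value are arbitrary examples; 90 kHz is the standard RTP clock rate for video such as H.264. RTMP uses its own chunked format, which this does not cover.

```python
import struct


def pack_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int, marker: bool = False) -> bytes:
    """Pack the fixed 12-byte RTP header (RFC 3550), no CSRCs, no extension."""
    version = 2
    byte0 = version << 6  # padding=0, extension=0, CSRC count=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    # Network byte order: 2 single bytes, 16-bit sequence, 32-bit timestamp, 32-bit SSRC
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


# One frame later at 30 fps on a 90 kHz clock the timestamp advances by 3000.
header = pack_rtp_header(payload_type=96, seq=1, timestamp=90_000, ssrc=0x12345678)
print(header.hex())
```

Each encoded frame is split into one or more payloads behind a header like this; the sequence number lets the receiver detect loss and reordering, and the timestamp tells it when to play the media.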

We think that RTMP for ingest streams will eventually be phased out and replaced with a more modern protocol for low latency streaming. WebRTC is a logical choice, as it uses encryption (DTLS/SRTP) by default and was built from the ground up for low latency video communication. Haivision’s SRT initiative is another promising UDP-based protocol for live streams. It will be interesting to see what kind of traction it gets.

4. Network Latency

The stream, packaged in the chosen protocol, then travels across the network, the section most often blamed for slow speeds. Rightfully so, considering how many links, routers, and handoffs the data passes through along the way.

This covers your own network connection, the internet routing between you and the server, and the server’s network, not to mention everything in between.
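A rough one-way network delay can be broken into propagation (distance over the speed of light in fiber, about 200 km per millisecond), serialization (pushing a packet's bits onto a link), and per-hop queuing and forwarding. The sketch below is a simplified model under stated assumptions: serialization is counted once on an assumed bottleneck link, and the hop count and per-hop delay are illustrative placeholders, not measurements.

```python
FIBER_KM_PER_MS = 200.0  # light in fiber travels at roughly 2/3 of c


def one_way_delay_ms(distance_km: float, bottleneck_bps: float, packet_bytes: int,
                     hops: int, per_hop_ms: float) -> float:
    """Simplified one-way delay: propagation + serialization + per-hop queuing."""
    propagation = distance_km / FIBER_KM_PER_MS
    serialization = packet_bytes * 8 / bottleneck_bps * 1000.0  # seconds -> ms
    queuing = hops * per_hop_ms  # assumed constant per router
    return propagation + serialization + queuing


# Assumed: ~4000 km fiber route, 10 Mbit/s uplink, 1200-byte packets, 12 hops at 0.1 ms each.
print(f"{one_way_delay_ms(4000, 10_000_000, 1200, 12, 0.1):.2f} ms")
```

Propagation dominates here at 20 ms, which is why physically closer servers (edge nodes) help: no amount of software optimization makes light travel faster.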

5. Server-Side Processing

There are a number of optimizations for ensuring that the threading model and code governing server-side processing run as efficiently as possible. This remains vital, as this stage can add anywhere from 16 ms to 1 second of delay.

In Red5 Pro’s case, we take care of repackaging the raw H.264 video into the various transport protocols. In some cases, we also have to transcode the audio, the video, or both. Google Chrome and Firefox, for example, don’t support AAC over WebRTC, so we transcode the audio to Opus for those streams to play with sound.
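Repackaging is cheap, while transcoding means a full decode and re-encode, so a server benefits from transcoding only when it must. The hypothetical sketch below (not Red5 Pro's actual logic) decides that for WebRTC egress using the mandatory-to-implement codecs from RFC 7874 (audio: Opus, G.711 PCMU/PCMA) and RFC 7742 (video: VP8, H.264). Real browsers support more codecs, and a real server would negotiate them via SDP; the target codecs chosen here are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Mandatory-to-implement WebRTC codecs (RFC 7874 for audio, RFC 7742 for video).
WEBRTC_AUDIO = {"opus", "pcmu", "pcma"}
WEBRTC_VIDEO = {"vp8", "h264"}


@dataclass
class TranscodePlan:
    audio_target: Optional[str]  # None means repackage only (pass through)
    video_target: Optional[str]


def normalize(codec: str) -> str:
    """Lowercase and drop dots so 'H.264' and 'h264' compare equal."""
    return codec.lower().replace(".", "")


def plan_webrtc_egress(audio_codec: str, video_codec: str) -> TranscodePlan:
    audio, video = normalize(audio_codec), normalize(video_codec)
    return TranscodePlan(
        audio_target=None if audio in WEBRTC_AUDIO else "opus",
        video_target=None if video in WEBRTC_VIDEO else "h264",
    )


print(plan_webrtc_egress("AAC", "H.264"))
```

For an RTMP ingest carrying AAC audio and H.264 video, only the audio needs the decode-and-encode round trip; the video can be repackaged as-is.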

Streaming to the Client:

6. The Protocol

Essentially the same as above, but in reverse: instead of packaging, the protocol must be unpackaged so that the video and audio codecs can be decoded. This takes about 0.75 ms, a little bit of time but not too much.
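On the receiving side of an RTP-based protocol such as RTSP or WebRTC, unpackaging starts with reading the RTP header to find where the codec payload begins. The sketch below parses the RFC 3550 fixed header and skips any CSRC list and header extension; it ignores the padding bit and any SRTP decryption, which a complete client would also handle.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int
    payload_offset: int  # index where the codec payload starts


def parse_rtp_header(packet: bytes) -> RtpHeader:
    if len(packet) < 12:
        raise ValueError("packet shorter than the 12-byte fixed RTP header")
    byte0, byte1, seq, ts, ssrc = struct.unpack_from("!BBHII", packet, 0)
    if byte0 >> 6 != 2:
        raise ValueError(f"unsupported RTP version {byte0 >> 6}")
    offset = 12 + 4 * (byte0 & 0x0F)  # skip 32-bit CSRC identifiers
    if byte0 & 0x10:  # extension bit: 16-bit profile id, 16-bit length in 32-bit words
        if len(packet) < offset + 4:
            raise ValueError("truncated header extension")
        _, ext_words = struct.unpack_from("!HH", packet, offset)
        offset += 4 + 4 * ext_words
    if offset > len(packet):
        raise ValueError("header longer than packet")
    return RtpHeader(bool(byte1 & 0x80), byte1 & 0x7F, seq, ts, ssrc, offset)


pkt = bytes([0x80, 0xE0, 0x00, 0x05]) + (90_000).to_bytes(4, "big") + (0x12345678).to_bytes(4, "big") + b"payload"
hdr = parse_rtp_header(pkt)
print(hdr, pkt[hdr.payload_offset:])
```

After this step the client hands the payload bytes to the audio or video decoder, using the sequence number to reorder packets and the timestamp to schedule playback.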

7. Network Latency

Again, same as above but in reverse.

8. Rendering the Client Side Audio

The decoded audio must be converted back into sound and sent out through the speakers.
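Part of the audio rendering delay comes from the output buffer: the sound device plays samples from a queue, and newly decoded audio waits behind whatever is already queued. The sketch below computes that delay; the period sizes and counts are assumed example values, since real figures depend on the operating system's audio stack and the browser.

```python
def output_buffer_latency_ms(period_frames: int, periods: int, sample_rate_hz: int) -> float:
    """Delay contributed by a full audio output buffer.

    A sample written to the end of the buffer is heard only after every
    queued period ahead of it has played.
    """
    return 1000.0 * period_frames * periods / sample_rate_hz


# Assumed configurations, for illustration only.
print(f"{output_buffer_latency_ms(480, 2, 48_000):.2f} ms")  # two 10 ms periods
print(f"{output_buffer_latency_ms(256, 2, 44_100):.2f} ms")
```

Smaller buffers lower latency but leave less slack before the device runs dry and the listener hears a glitch.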

9. Rendering the Client Side Video

This includes decoding the stream and rendering via the graphics card (or software rendering in some cases), ensuring that the video is presented as intended.
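Even after a frame is decoded, it is usually shown only at the display's next refresh. The sketch below estimates that wait, assuming the frame becomes ready at a uniformly random point within a refresh interval and that a given number of frames (an assumed parameter) are already queued ahead of it.

```python
from typing import Tuple


def presentation_delay_ms(refresh_hz: float, queued_frames: int = 0) -> Tuple[float, float]:
    """Return (average, worst-case) delay from 'frame decoded' to 'frame on screen'."""
    interval = 1000.0 / refresh_hz
    average = queued_frames * interval + interval / 2  # half an interval on average
    worst = (queued_frames + 1) * interval  # just missed the refresh
    return average, worst


for hz in (60, 120):
    avg, worst = presentation_delay_ms(hz)
    print(f"{hz} Hz: average {avg:.2f} ms, worst {worst:.2f} ms")
```

At 60 Hz the refresh alone can add up to about 16.7 ms, and each extra queued frame adds another full interval.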

Every step in this process entails a bit of processing, adding a little time to the stream. Eventually, those milliseconds add up, producing latency. Though it sounds simple to display a bunch of pixels on a screen or play audio through a speaker, in reality it’s much more involved than that.
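One way to reason about how the stages add up is a simple latency budget that sums a (minimum, maximum) range per stage. In the sketch below, the entries marked "from the post" use the figures quoted above; every entry marked "assumed" is a placeholder chosen for illustration, not a measurement of any real deployment.

```python
from typing import Dict, Tuple

Budget = Dict[str, Tuple[float, float]]  # stage -> (min_ms, max_ms)

BUDGET: Budget = {
    "video capture/encode": (0.75, 0.75),    # from the post
    "ingest network": (20.0, 80.0),           # assumed
    "server processing": (16.0, 1000.0),      # from the post
    "egress protocol unpackaging": (0.75, 0.75),  # from the post
    "egress network": (20.0, 80.0),           # assumed
    "audio/video rendering": (10.0, 40.0),    # assumed
}


def total_latency_ms(budget: Budget) -> Tuple[float, float]:
    """Sum the best-case and worst-case glass-to-glass latency."""
    lows = sum(low for low, _ in budget.values())
    highs = sum(high for _, high in budget.values())
    return lows, highs


def widest_stage(budget: Budget) -> str:
    """Stage with the largest spread between best and worst case."""
    return max(budget, key=lambda stage: budget[stage][1] - budget[stage][0])


low, high = total_latency_ms(BUDGET)
print(f"end to end: {low:.1f} to {high:.1f} ms; most variable stage: {widest_stage(BUDGET)}")
```

Under these assumptions, the stage with the widest range is where optimization pays off most, which is why server-side efficiency matters so much.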

Fortunately, you don’t have to worry about everything that goes on behind the scenes of your live stream. We’ve already thought about it and continue to work to decrease the latency.

Will we ever get it down to zero?

Probably not, but when you consider that it takes about 20ms for you to move your arm, it might just be unavoidable. Information travels at very high speeds, but it still has to travel. That traveling, and the inevitable processing that comes with it, remains an obstacle to 0-second latency (for now at least).

In the meantime, there’s Red5 Pro’s sub-500ms live-streaming solution. Check it out for yourself and give us a call.